AI's Energy Future: A Follow-up

Will efficiency gains and economic constraints prevent an AI-driven energy crisis?

Two weeks ago, I wrote about how AI agents might consume far more energy than current estimates suggest. I painted a scenario where each of us would have multiple AI agents working around the clock, potentially consuming enormous amounts of energy. The implications are concerning: countries with more limited energy resources might find themselves at a severe disadvantage, unable to access the same level of AI capabilities as energy-rich nations. Energy constraints would equal intelligence constraints.

Several readers pushed back on this scenario. Their counterarguments were thoughtful and worth exploring in more detail. While my original scenario is just one possible future, understanding the arguments both for and against it can hopefully help us think more clearly about this issue.

Point 1: New technologies almost never end up using that much energy

In my original post, I noted that AI models consume large amounts of energy. While we’ll likely find ways to make them more energy-efficient in the future, I believe these gains will be offset by models growing larger or using more infrastructure. That’s why I based my calculations on an average energy use of 1 Wh per request.

Several people pointed out that the field has made far greater efficiency gains than I assumed, meaning I may have overestimated energy needs. They cited figures showing models now use 100 to 1,000 times less energy per request for the same performance compared to a few years ago. History also offers examples where new technologies were expected to consume over 10% of global energy, but efficiency improvements prevented that from happening.

This is a compelling point - humans have consistently found ways to innovate around resource constraints. However, I believe we shouldn’t underestimate energy needs per request for two reasons.

First, as mentioned earlier, I believe most efficiency gains will quickly be offset by the development of larger and more advanced models. Even if models don’t grow much bigger, the way they handle requests will likely become more compute-intensive. A key example is OpenAI’s o1 model. Its main advancement isn’t size but its “chain of thought” approach, where it generates intermediate reasoning steps sequentially, allowing it to solve complex problems by breaking them down into smaller, logical components. This process takes substantially more computation and, therefore, more energy than a standard model. I expect similar trends to continue in the future.

Second, while I’m impressed by the efficiency gains of recent years, I’m cautious about projecting them too far into the future. Take the often-quoted figure of 0.3 Wh per Google search, a number that is actually outdated, dating back to 2009. More recent estimates suggest it’s about 10 times lower today. This demonstrates ongoing efficiency improvements but also highlights that it took Google 14 years to achieve a 10x gain - far slower than the 100–1,000x improvements mentioned earlier. At some point, all the easy gains will have been exhausted. Additionally, future AI agents won’t just run model inference; they’ll likely make numerous requests to multiple search engines and databases in parallel, contributing to overall energy use.

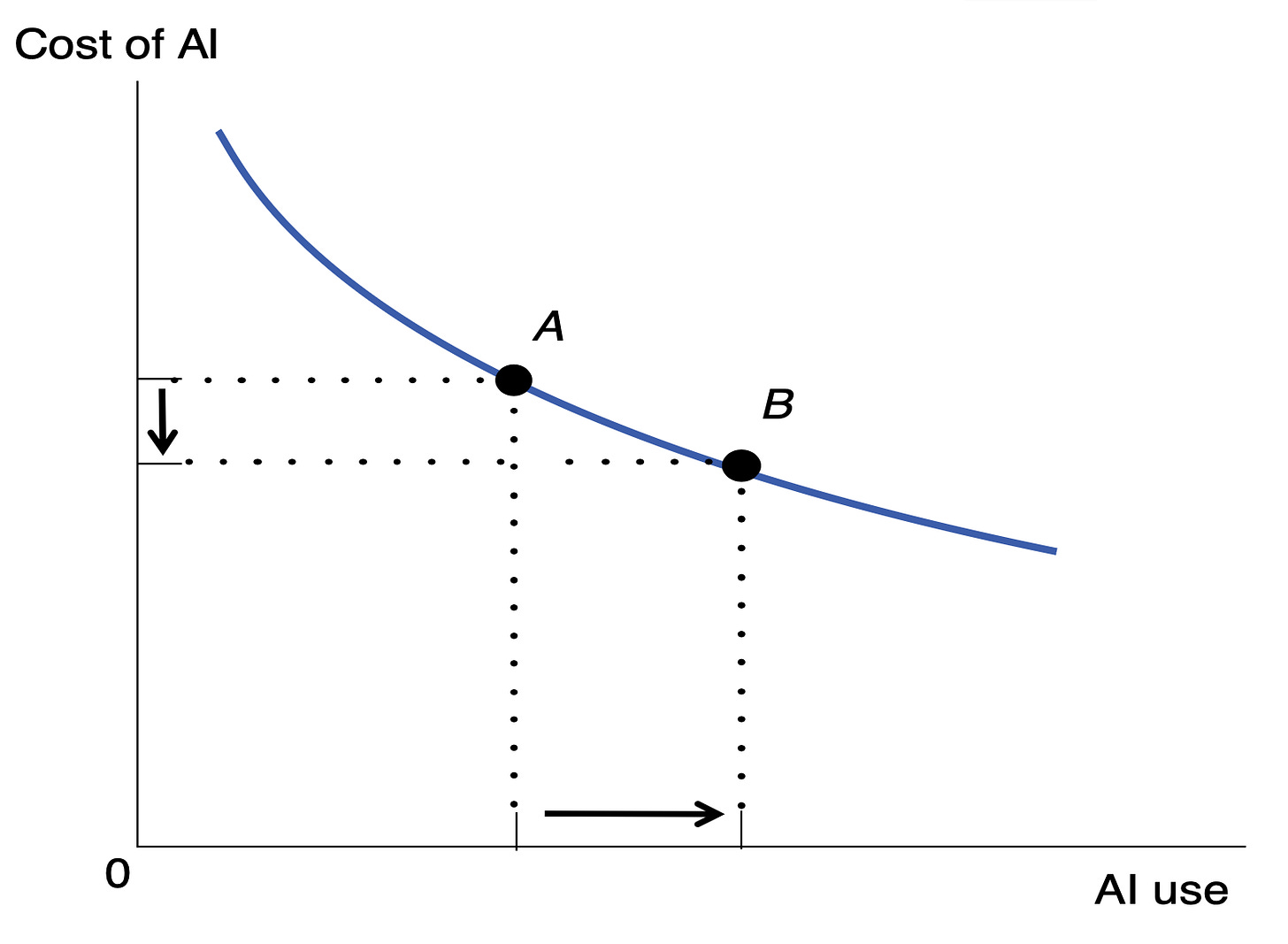
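To make the comparison of improvement rates concrete, here is a minimal sketch that converts a total efficiency gain into a constant yearly factor. The 10x over 14 years comes from the Google search figures above. The 4-year window for the 100–1,000x AI gains is my own assumption for illustration, since readers only said “a few years”.

```python
def annual_gain(total_factor: float, years: float) -> float:
    """Yearly improvement factor that compounds to `total_factor` over `years`."""
    if total_factor <= 0 or years <= 0:
        raise ValueError("total_factor and years must be positive")
    return total_factor ** (1.0 / years)


# From the post: Google search became about 10x more efficient over 14 years.
google_rate = annual_gain(10, 14)

# Assumption (not in the post): "a few years" is taken to mean 4 years.
ASSUMED_AI_YEARS = 4
ai_rate_low = annual_gain(100, ASSUMED_AI_YEARS)
ai_rate_high = annual_gain(1000, ASSUMED_AI_YEARS)

print(f"Google search: {google_rate:.2f}x per year")
print(f"AI models:     {ai_rate_low:.2f}x to {ai_rate_high:.2f}x per year")
```

Under these assumptions, Google’s gain works out to roughly 1.18x per year, while the AI figures imply a several-fold improvement every year. Sustaining the latter pace for a decade or more seems much harder than the former.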

In other words, while I fully agree that we’ll make AI models more efficient for the same output, I also believe the output won’t remain static, and we’ll just keep raising the bar as models improve. This rebound effect is well known in economics. When the rebound is large enough that the added demand outweighs the efficiency gains, it is known as Jevons’ paradox: improving resource efficiency ends up increasing overall resource demand instead of reducing it.
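The rebound logic can be sketched in a few lines. Total energy is simply demand times energy per unit, so what matters is whether demand grows faster than efficiency improves. The numbers below are purely hypothetical and chosen only to show both outcomes; they are not estimates from this post.

```python
def total_energy_ratio(efficiency_gain: float, demand_growth: float) -> float:
    """Ratio of new to old total energy use.

    efficiency_gain: factor by which energy per unit of output drops (10 = 10x less).
    demand_growth:   factor by which the amount of output requested grows.
    """
    if efficiency_gain <= 0 or demand_growth < 0:
        raise ValueError("efficiency_gain must be positive, demand_growth non-negative")
    return demand_growth / efficiency_gain


# Hypothetical case 1: efficiency wins (10x gain, demand only grows 5x).
partial_rebound = total_energy_ratio(efficiency_gain=10, demand_growth=5)

# Hypothetical case 2: Jevons' paradox (10x gain, demand grows 30x).
jevons = total_energy_ratio(efficiency_gain=10, demand_growth=30)

print(f"Partial rebound: total energy changes by {partial_rebound:.1f}x")
print(f"Jevons' paradox: total energy changes by {jevons:.1f}x")
```

A ratio below 1 means total energy use falls despite the rebound; a ratio above 1 is the Jevons case.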

Point 2: The scenario is not economically possible

Another counterargument is that even if energy demands per request stay high, the scenario is implausible because it wouldn’t be economically viable. My calculations assumed 10,000 requests per person per day - equivalent to each active agent making 1,000 requests daily. At 1 Wh per request, this adds up to 10 kWh per day. For context, the average person in a high-income country consumes about 100–200 kWh of energy daily, with about 20% coming from electricity. An extra 10 kWh would be a substantial increase, but not entirely unreasonable.
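For readers who want to check or vary the numbers, here is a minimal sketch of this calculation. The inputs are the ones stated above (10 agents, 1,000 requests per agent per day, 1 Wh per request, 100–200 kWh of daily energy use, about 20% of it electricity). The function and constant names are mine, and the comparison against the electricity share alone is an extra step I derive from those figures.

```python
AGENTS_PER_PERSON = 10
REQUESTS_PER_AGENT_PER_DAY = 1_000
WH_PER_REQUEST = 1.0
ELECTRICITY_SHARE = 0.20  # about 20% of energy use is electricity


def daily_agent_energy_kwh(agents: int, requests_per_agent: int, wh_per_request: float) -> float:
    """Daily energy used by one person's agents, in kWh."""
    return agents * requests_per_agent * wh_per_request / 1000.0


extra_kwh = daily_agent_energy_kwh(
    AGENTS_PER_PERSON, REQUESTS_PER_AGENT_PER_DAY, WH_PER_REQUEST
)
print(f"Extra demand from agents: {extra_kwh:.0f} kWh per day")

# Compare against the low and high end of daily energy use per person.
for baseline_kwh in (100, 200):
    electricity_kwh = baseline_kwh * ELECTRICITY_SHARE
    print(
        f"Baseline {baseline_kwh} kWh/day: "
        f"+{extra_kwh / baseline_kwh:.0%} of total energy, "
        f"+{extra_kwh / electricity_kwh:.0%} of electricity"
    )
```

Measured against total energy use, the extra 10 kWh is a 5–10% increase. Measured against electricity alone (20–40 kWh per day under these figures), it is a 25–50% increase, which is where the real strain would show up.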

Current electricity costs average around 0.2 USD or EUR per kWh - likely slightly overestimated for the US and underestimated for Europe, but a reasonable average. This means the projected energy costs per person for 10 agents in my scenario would be about 2 USD per day, or 60 USD per month, totaling 720 USD per year. With a billion customers, this amounts to about 720 billion USD per year, close to a trillion-dollar industry. The last time we saw such volumes from a new activity was with the rise of the internet, e-commerce, or mobile phones, i.e. developments that took decades. Moreover, 720 USD would represent a sizeable portion of disposable income for most people.
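The cost figures follow from a short chain of multiplications, sketched here so the assumptions are easy to change. The inputs are the ones from the scenario (10 kWh per day, 0.2 USD per kWh, a billion customers). The 30-day month and 12-month year match the rounding used in this post; the function name is my own.

```python
def agent_energy_costs(
    kwh_per_day: float,
    price_per_kwh: float,
    days_per_month: int = 30,
    months_per_year: int = 12,
) -> dict:
    """Per-person energy cost of running agents, in the currency of `price_per_kwh`."""
    per_day = kwh_per_day * price_per_kwh
    per_month = per_day * days_per_month
    per_year = per_month * months_per_year
    return {"day": per_day, "month": per_month, "year": per_year}


costs = agent_energy_costs(kwh_per_day=10, price_per_kwh=0.2)
print(f"Per person: {costs['day']:.0f} USD/day, "
      f"{costs['month']:.0f} USD/month, {costs['year']:.0f} USD/year")

CUSTOMERS = 1_000_000_000  # a billion customers, as in the scenario
market_usd = costs["year"] * CUSTOMERS
print(f"Market size: {market_usd / 1e9:.0f} billion USD per year")
```

Halving either the electricity price or the energy per request halves every figure, which shows how sensitive the scenario is to those two inputs.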

While I believe these numbers are accurate, I don’t think they make the scenario economically impossible. It’s true that this would represent a major shift in spending, but that’s exactly the premise of the scenario - it assumes the agents are so useful that people would willingly pay this amount.

It’s possible that the economic tradeoff won’t make sense, i.e. agents will be considered too expensive, and people won’t spend their money on them. In that case, we arrive at my worst-case scenario, where people choose to be intelligence-constrained because they can’t afford the energy. Alternatively, the energy market might adapt to this demand, leading to cheaper electricity, in which case the scenario would become economically viable, but this would require a massive and rapid build-out of energy production.

Point 3: Agents are not that useful

The entire discussion hinges on the assumption that agents will become so useful that people will willingly spend a major portion of their disposable income on them. Whether this comes true remains uncertain.

That said, there are good reasons to believe it might happen. Who wouldn’t want one or more agents to gather information or perform tasks on their behalf? It’s like having personal assistants. The main limitation today is that AI models can’t really interact with the world - even the digital world - and can only retrieve information. But this is changing quickly. Both OpenAI and Anthropic have recently introduced models capable of interacting with computer interfaces, for example. While still clunky, it’s easy to imagine these capabilities improving dramatically in the near future.

In addition, the tooling for orchestrating AI models (e.g., LangChain, Griptape, CrewAI, AutoGen) is still in its infancy. As this infrastructure matures, building multi-agent systems will become much easier, enabling them to handle increasingly complex tasks - including ones we can’t yet imagine. This progression mirrors the dynamics of other technological breakthroughs: it’s hard to foresee their full potential at first. Early on, people focus on obvious use cases, often by replacing existing processes with ones where the technology plays a key role, such as newspapers going online or web services adding mobile apps. However, the biggest winners are usually ideas that wouldn’t have made sense before the technology existed. Google, for instance, was unimaginable before the internet, and social media didn’t exist pre-internet. Services like Uber only became viable with smartphones. Similarly, it’s almost certain the most transformative AI use cases are yet to emerge.

Lastly, I think agents will become ubiquitous because dealing with information on the web is such a hassle. Have you ever tried to buy something and ended up frustrated by the process? It’s a mess. Finding the right information is hard enough with all the ads bombarding you. Even when you find a decent website, they often try to upsell you things you don’t need. Booking a flight, for example, always feels like I’m being taken advantage of. Prices fluctuate based on the time of day, your location, or even the device you’re using - booking with a Mac or iPhone can demonstrably raise prices. My human brain is no match for the algorithms I’m up against. So why should I fight this battle myself? I want an agent to negotiate for me, armed with my preferences, unlimited time to browse, and the ability to test countless scenarios across hundreds of websites.

I thus believe that agents could be very useful in our daily lives. I haven’t even touched on those I would use for work, but the same reasons discussed above apply here as well. These agents could be just as impactful and even directly economically necessary.

Point 4: If agents end up being so useful, we’ll have bigger problems

This brings us to the fourth point: if agents become so useful, humans could soon be outcompeted in the job market, leading to widespread unemployment and societal unrest. In that case, energy would be the least of our concerns.

While I understand the basis of this counterargument, I don’t agree with it. I firmly believe human creativity and our drive to do more are limitless. Whatever AI automates, we’ll find new, more challenging tasks to tackle. Historically, new technologies have always created more jobs than they’ve replaced. Things might be different with AI, but I see no strong reason to believe they will be. There are challenges with the speed of these changes, but I doubt the underlying dynamics will fundamentally shift.

Even if massive unemployment became an issue, it wouldn’t eliminate the energy concerns. Some forms of the argument assume that if AI agents become powerful enough to handle jobs for us, they will also be intelligent enough to solve the energy problem. I don’t agree with that. Building multi-agent systems capable of performing many useful tasks doesn’t mean creating an AI that can solve fusion. We may get there eventually, but my sense is that energy challenges will become pressing within the next two to three years.

That said, I very much value all the counterarguments and appreciate the discussion. It’s clear that this topic will remain fascinating!

CODA

This is a newsletter with two subscription types. I highly recommend switching to the paid version. While all content will remain free, I will donate all financial support to the EPFL AI Center.

To stay in touch, here are other ways to find me:

Social: I’m mainly on LinkedIn but also have presences on Mastodon, Bluesky, and X.

Writing: I infrequently write on another Substack on digital developments in health, called Digital Epidemiology.