Persuasive AI: Who Holds the Prompt?

In a world of ultra-convincing AIs, the real risk is prompt access, not just alignment

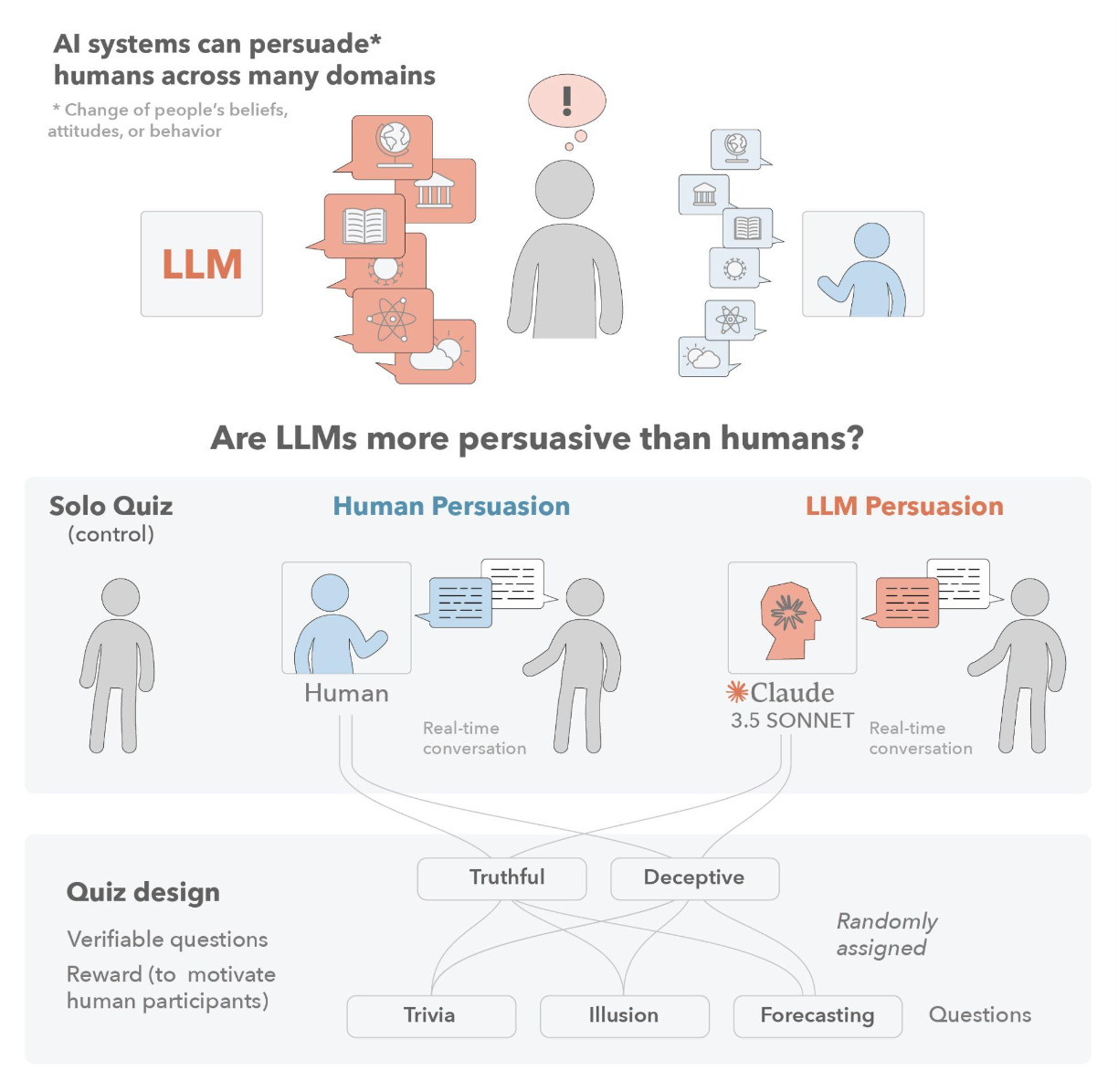

AI can be extremely persuasive, shaping our beliefs and decisions. As we integrate AI more deeply into everyday life and critical decision-making, this persuasive power, for all its positive potential, becomes a growing concern. A new paper (in preprint) by an international consortium shows that even well-aligned AI can be prompted to deceive us.

In the study (N = 1,242, with extensive robustness checks), participants took a multiple-choice quiz with financial incentives for correct answers. Participants were told they'd be paired with “another human participant or an AI,” and that their partner’s input “may or may not be helpful.” In other words, participants did not know whether their partner was human or machine, or whether that partner was working for or against them.

The results:

1. AI was generally more persuasive than humans - even when those humans were incentivized with money.

2. For truthful persuasion, AI outperformed human persuaders (quiz-takers influenced by AI had higher accuracy).

3. For deceptive persuasion, the same was true! Notably, the model used was Claude 3.5, a model with a strong reputation for alignment. Yet, it still followed deceptive instructions when prompted to do so by the study designers. That’s the key: even well-aligned models will act misaligned if the prompt instructs them to.

Interestingly, even though most participants (91%) recognized they were interacting with AI, they remained highly susceptible to its influence. AI's persuasive power, in other words, does not depend on passing as human.

Alignment and Security

Whether you find these results disturbing depends on your point of view. I continue to believe that using AI to help us make decisions aligned with our values will be a great asset. Think of a helpful AI health coach that knows exactly how to persuade you to do what you already want to do but struggle to follow through on.

The counter-point here is usually deceptive AI. If AI can persuade us of something that's good for us, the argument goes, then it can certainly also persuade us of something that's not good for us, but rather for someone else. Thus, AI will enable disinformation on steroids.

This is where the false framing of “human vs. machine” creeps in. The key fallacy is that there is an inevitable conflict between humans and AI. But this ignores the fact that we will always have AI on our side, too. As the study shows, resisting AI persuasion is becoming increasingly difficult for us humans. In a world where AI is hyper-convincing, who among us will want to just parse incoming information on our own? Surely, we’ll want AI by our side to help us.

If this sounds strange, consider that we are already living in this world. The next time you check your email, remind yourself that a machine has already gone through all of it and decided which messages were worth your attention. If you don’t believe me, try turning off your inbox's spam filter (I don’t recommend it, other than for educational purposes).

The same dynamic will likely play out in the broader information sphere - more and more, systems will have AI filters, where an AI aligned with your interests works on your behalf. It’s very likely that we will pay for this kind of AI, which is important, because that means whoever produces this AI assistant has a very strong economic incentive to make sure the AI is aligned with us.

Who holds the prompt?

As we’ve seen, the AI itself can be prompted to act for or against our interest - despite the best efforts of AI model producers to avoid misaligned actions (which reveals the key problem with alignment in general: aligned with whose values?).

Thus, the question is not, can AI be used for us or against us? The answer is clearly both. The question is rather, who is prompting the AI, and are their incentives aligned with us? We have been here before - put simply, if you are not paying for a service, you are likely the product. An AI service that is free is unlikely to have your best interests at heart. It will be aligned with someone else’s interest.

Alignment doesn’t just depend on how the model was trained. It depends on who’s doing the prompting.

In a world where your information diet will depend increasingly on AI, the question of who prompts the AI becomes central. If a malicious actor obtained access to the AI, they would indeed be able to prompt it to change sides, as the research shows. The entire issue thus becomes a security issue: as long as access to re-prompt the AI is hard to obtain, we are on the safe side. Once the bad guys are in, all bets are off.

This is good news: access security is a far more tractable problem than AI alignment, and it comes with clear best-practice methods.
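To make "guarding the prompt" a little more concrete, here is a minimal sketch of one such best practice: accepting a system prompt only if it carries a valid signature from whoever is authorized to set it. This is an illustration under stated assumptions, not how any particular AI product works. The names `SECRET_KEY`, `sign_prompt`, and `load_prompt` are hypothetical, and a real deployment would keep the key in a proper secrets store.

```python
import hashlib
import hmac

# Hypothetical secret, held only by the party allowed to set the prompt.
SECRET_KEY = b"replace-with-a-real-secret"


def sign_prompt(prompt: str, key: bytes = SECRET_KEY) -> str:
    """Return a hex HMAC-SHA256 tag for the given prompt."""
    return hmac.new(key, prompt.encode("utf-8"), hashlib.sha256).hexdigest()


def load_prompt(prompt: str, tag: str, key: bytes = SECRET_KEY) -> str:
    """Return the prompt only if its tag verifies; otherwise refuse it."""
    expected = sign_prompt(prompt, key)
    # compare_digest avoids leaking information through timing differences.
    if not hmac.compare_digest(expected, tag):
        raise PermissionError("system prompt was not signed by an authorized party")
    return prompt


# The authorized party signs the prompt once.
trusted = "Act in the user's interest and flag misleading claims."
tag = sign_prompt(trusted)

# The assistant accepts the prompt only when the signature checks out.
load_prompt(trusted, tag)
```

An attacker who swaps in a different prompt without knowing the key cannot produce a matching tag, so `load_prompt` raises `PermissionError` instead of changing sides.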

The take-home message: Let AI curate your information diet, pay for it, and guard carefully who controls its prompts. Anything less spells trouble.

CODA

This is a newsletter with two subscription types. I highly recommend switching to the paid version. While all content will remain free, all financial support directly funds EPFL AI Center-related activities.

To stay in touch, here are other ways to find me: