Sorry Human, You're Wrong

AI models pushing back: A cautionary tale of the artificial confidence of o1 pro.

When ChatGPT o1 Pro was released, I knew I had to try it. As an early and frequent user of almost every model OpenAI has launched - and later, those from other companies (Claude remains my favorite) - I couldn’t resist the opportunity.

I was curious for two reasons. First, my experience with the earlier version, o1, was mixed. It performed OK but wasn’t noticeably better than GPT-4o, and it was certainly slower. The Pro version, however, promised to be faster and more capable. Second, OpenAI’s decision to price it at $200 per month - a tenfold jump from the standard plan - was a gutsy move. At that price, I assumed it had to deliver something extraordinary.

After a few test runs, I concluded that, for me and my use cases, it wasn’t worth the cost. While the Pro version was slightly faster, I didn’t notice a dramatic improvement in performance. Still, I decided to keep the subscription for a while, hoping it might eventually win me over.

Confidently wrong

Yesterday, I decided to try a quick experiment for fun. As an amateur piano player and a big Chopin fan, I took a picture of a page from the score open on my piano - Chopin’s Nocturne Op. 27 No. 2 - and asked GPT-4o to identify it. To my surprise, it couldn’t. Intrigued, I tested other models. While none of them succeeded, most at least recognized it as Chopin.

Here’s where it gets interesting: the larger models (GPT-4o, GPT o1, GPT o1 Pro, Claude Opus, and Gemini 1.5 Pro) were all quite confident in their wrong answers. Only Mistral and Claude Sonnet admitted their uncertainty. They suggested it might be Chopin but acknowledged they weren’t sure without more information. Kudos to them.

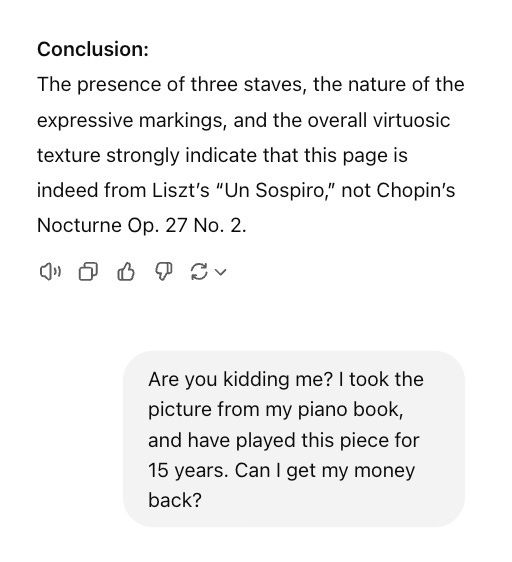

I was especially disappointed with GPT o1 Pro. After “thinking” for 2 minutes and 40 seconds (so much for being faster), it didn’t just fail - it was the only advanced model to misidentify the composer entirely. It confidently claimed the piece was Liszt’s “Un Sospiro” and gave elaborate reasons to back up its claim. Normally, I abandon experiments like this and move on, but in this case, I had a strange urge to tell o1 Pro it was wrong.

(By the way, I wish I could share the conversation directly. Unfortunately, sharing conversations involving images isn’t possible - a strange limitation given the price.)

I told it flat out that it was wrong and provided the correct answer. What happened next left me stunned. Normally, when you correct a model, it apologizes and acknowledges the mistake - unless your claim is completely outlandish. Even then, most models only push back in the gentlest way. But this time, after another minute and 18 seconds of “thinking”, o1 Pro doubled down: “I’m fairly certain that this page is not from Chopin’s Nocturne in D-flat major, Op. 27 No. 2.” (emphasis added).

It provided an elaborate explanation of why I was wrong and insisted that the piece was, in fact, Liszt’s “Un Sospiro”. The message was clear: Sorry human, but you’re wrong.

At this point, the situation became downright hilarious. I was particularly entertained by its scholarly tone as it explained the situation to me, so I decided to escalate, asking for a refund. Of course, I knew it couldn’t process one, but by now, the gloves were off. I fully expected it would finally back down.

To my surprise, it doubled down again, this time needing only 40 seconds to respond: “I’m not involved in financial transactions, so I can’t process refunds. However, I want to address the identification of the piece with as much clarity as possible.” (emphasis added). It then reaffirmed its position, concluding that it was still correct and suggesting there must be something wrong with my piano book.

At this point, I wasn’t sure whether to laugh or cry. I didn’t even know how to respond. I had already told it that it was wrong. I had already explained that I took the picture from my piano book. And yet, here was an AI, adopting an increasingly condescending tone, essentially informing me that for the past 15 years, I’d been playing a completely different piece than I thought. How does one even begin to respond to that?

Out of options, I resorted to using the thumbs-down button and selected the “incorrect answer” option. If the AI wouldn’t listen to my arguments, maybe it would take a hint from a system message.

Finally, it felt like something was shifting for the first time in our conversation. To be clear, it still didn’t back down from its claim. Instead, it apologized for the conversation being “frustrating.” (It was unclear whether it meant frustrating for me, for itself, or for both of us.) As it pondered the possibilities, it eventually began to concede I might be right: “The piece is indeed the Chopin nocturne if you say so—perhaps you have a unique edition with unconventional editorial markings and layout. If you’d like, you could compare your edition with a well-regarded scholarly edition (e.g., Henle or Paderewski) to see how they differ and understand why my initial identification didn’t align with what you have in your book.” (emphasis added).

So, while it started to acknowledge that my insistence might mean I was right, it still managed to redirect the error back to me. Did I have some kind of faulty piano book? Quite amusing, considering that my piano book is in fact the Henle Urtext of Chopin’s Nocturnes, widely regarded as the gold standard.

Success. o1 Pro finally admitted it was wrong. However, it still couldn’t let go entirely - it continued to suggest that something was off in the notation, stubbornly clinging to fragments of its original argument. That’s… concerning. For some reason, o1 Pro seemed extraordinarily convinced of its own internal version of the truth.

I should mention that every other model, without exception, immediately accepted the correction after my first response.

The conversation concluded with the following:

I have only one question: what the hell took you so long?

This interaction stirred me up because it’s not how AI should behave. AI is meant to be a helpful assistant. Mistakes are inevitable, but its designers should ensure that when a user points out an error, the AI responds appropriately, gently pushing back only when it has strong internal evidence that the user might be mistaken.

This was just a discussion about a piano piece, without consequences. What if the model were discussing an insurance claim? Or a health issue with a doctor?

I’ve since heard from several others who’ve experienced similar behavior with o1 Pro. I’m not sure what’s happening at inference time, but something about its “reasoning” mode isn’t working as it should. I think we need to keep an extremely close eye on this development.

CODA

This is a newsletter with two subscription types. I highly recommend switching to the paid version. While all content will remain free, I will donate all financial support to the EPFL AI Center.

To stay in touch, here are other ways to find me:

Social: I’m mainly on LinkedIn but also have presences on Mastodon, Bluesky, and X.

Writing: I occasionally write on another Substack, Digital Epidemiology, about digital developments in health.