Weekend Read in AI - #4

OpenAI’s Deep research and Google’s Gemini 2: Two AI releases shaking things up - plus a medical AI study with big implications.

Among the many announcements this week, two stood out to me: OpenAI’s Deep research agent - an AI that synthesizes large amounts of online information and completes multi-step research tasks - and Google’s long-awaited release of the Gemini 2 model family. Both will have lasting implications, but for different reasons.

Deep research

Let’s start with Deep research, OpenAI’s new agent for gathering and synthesizing information. You’ve probably come across the idea of AI agents before: AI tools that autonomously perform specific tasks in the background. Many AI providers have announced frameworks for building such agents, but these have remained abstract for most people. The use cases aren’t always clear, and the tools are still clunky. Deep research, however, is a concrete and accessible example of an AI agent: you give it a research task, and it gathers the information for you.

The idea itself isn’t new: Google launched a similar agent, also called Deep Research, in Gemini a couple of months earlier. But once again, OpenAI has nailed the implementation and user experience, which is likely why Deep research has generated such buzz. I had the chance to use it myself, and I have to say it’s really good.

If you’ve read the various takes on Deep research, you’ve probably noticed mixed reactions. Some people love it 🤩 - others aren’t as impressed 🥱. I think this largely comes down to what you’re comparing it against.

For example, if I ask it for information on a topic where I have little expertise, I find the answers extremely helpful. Is everything 100% correct? Are all the details included? Probably not. But if my goal is to move from beginner level to reasonably advanced, it’s perfect.

On the other hand, if I ask it about a field where I’m highly knowledgeable - my daily research work, for instance - I’m bound to be underwhelmed. It won’t capture all the nuances, and as an expert, I might dismiss it as unhelpful. But that’s true for any broad information source.

Think about how people often criticize the media: “Whenever I read about a topic I know well, I notice inaccuracies and missing details.” Yet those same people continue to rely on the media in areas where they lack expertise. This phenomenon even has a name: the Gell-Mann amnesia effect. According to Wikipedia:

“The Gell-Mann amnesia effect is a cognitive bias describing the tendency of individuals to critically assess media reports in a domain they are knowledgeable about, yet continue to trust reporting in other areas despite recognizing similar potential inaccuracies.”

I’m not sure I fully agree with the “amnesia” part of this critique. Yes, when I read about a topic I know deeply, I may be highly critical, because I understand the nuances that a journalist had to simplify or omit. But why should that lead me to distrust reporting on topics where I’m not an expert? The role of reporting isn’t to provide exhaustive detail; it’s to get me up to speed efficiently in areas where I lack expertise.

The same applies to Deep research. If you’re not an expert in a field, it can get you quite far, quite fast. And since most of us are not experts in most things, this makes it an incredibly useful tool. I highly recommend checking it out - though I recognize that its current $200/month price tag might be a dealbreaker for many.

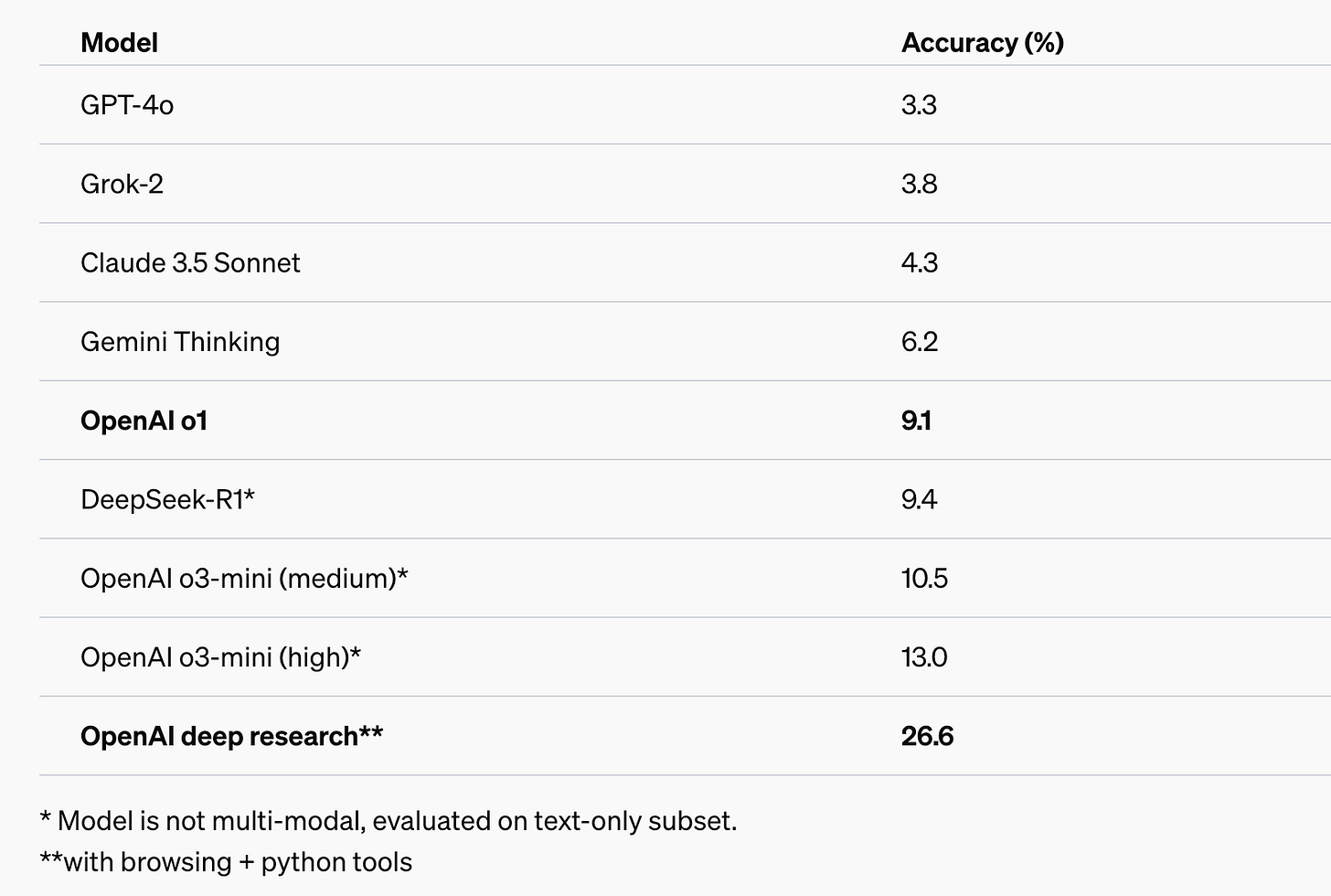

Let’s also not forget that Deep research recently posted the top score on Humanity’s Last Exam, a benchmark of expert-level questions across many fields. The comparison may not be entirely fair, given that not all models have browsing and coding capabilities like Deep research, but it’s still impressive how well this agent performs at the cutting edge of human knowledge.

Gemini 2

The other big development this week: Google released the Gemini 2 model family.

You might be tempted to shrug and think: “Another day, another AI model.” But there are two key reasons why this release matters.

First, the Gemini 2 models have been available in experimental mode for a while, and they’ve topped multiple AI benchmarks (see, e.g., lmarena.ai). So we’re not just talking about another “wannabe” entrant into the model game. They’re the leading models in many areas, which makes them impossible to ignore.

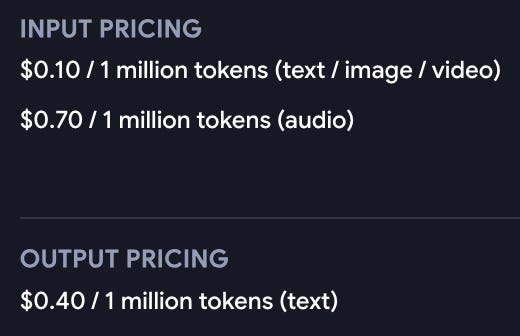

Second, there’s an economic angle. Google has made these models available via API at a highly competitive price: at least an order of magnitude cheaper than OpenAI’s GPT-4 API.

To be clear, this isn’t just a money saver; it’s a game changer. Many AI applications have struggled with business viability, especially those where users quickly rack up expensive API costs. With prices this low, entirely new business models become feasible.

We can thus expect two things:

1. A surge of new AI applications entering the market, enabled by lower-cost access to high-quality models.

2. A further drop in AI pricing across other models - because without a comparable price cut, competitors simply won’t be viable anymore.

Exciting times ahead!

Varia

A couple of other things caught my attention this week. OpenAI also released a new model: o3-mini. This is OpenAI’s next-generation reasoning model, and it’s supposed to be quite powerful. But so far, I must say I’m underwhelmed. For everyday use, I’m not seeing a massive improvement over GPT-4 or Claude. Maybe it shines in coding or mathematical reasoning, but since I mainly use AI as a chatbot, I haven’t noticed a big leap. That said, I’m eager to see what happens when the full o3 model is released.

Another development that caught my attention is a fascinating new study in Nature Medicine. This was a randomized controlled trial on medical decision-making, comparing three groups: physicians only, physicians with AI (GPT-4), and AI only (GPT-4). The results: AI alone and AI-assisted physicians performed similarly, while physicians without AI performed worse.

Some have taken this to mean that AI-only medicine is the future, given that AI can match doctors today, it’s cheaper, and it will only improve over time. But that view ignores many essential aspects of human involvement in medicine. For me, the biggest takeaway is that doctors with AI outperform doctors without it. This isn’t the first study to show that, and it won’t be the last - but it raises an interesting question:

Will we reach a point where a physician working without AI is seen as problematic?

We’ll have to wait and see. But the implications ahead - societal, ethical, and legal - are fascinating.

CODA

This is a newsletter with two subscription types. I highly recommend switching to the paid version. While all content will remain free, all financial support directly funds EPFL AI Center activities.

To stay in touch, here are other ways to find me:

Social: I’m mainly on LinkedIn but also have a presence on Mastodon, Bluesky, and X.

Podcasting: I’m hosting an AI podcast at the EPFL AI Center called “Inside AI” (Apple Podcasts, Spotify), where I have the privilege to talk to people who are much smarter than me.

Conferences: I’m an organizer of AMLD, the Applied Machine Learning Days - our next large event, AMLD 2025, takes place on Feb 11-14, 2025, in Lausanne, Switzerland.